OpenAI Agents SDK and Convex persistent text streaming with tool calls

Current Setup:

. streamChat is an HTTP action in Convex

. I have a unified_agent that needs to use Slack tools requiring Node.js runtime

. The agent needs to access conversation history and maintain context

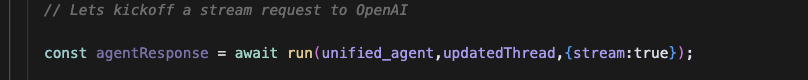

. I'm using the @openai/agents library's run() function
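As a minimal sketch of the history requirement, assuming messages are stored as `{ role, text, createdAt }` rows (a hypothetical schema), the function below orders them, keeps the most recent ones, and maps them to item objects for the array form of `run()`'s input. The item shapes are our reading of the SDK's user and assistant message items; check them against the library's `AgentInputItem` type before relying on them.

```typescript
// Hypothetical row shape for a stored chat message.
type StoredMessage = {
  role: "user" | "assistant";
  text: string;
  createdAt: number;
};

// Assumed subset of the SDK's input item shapes; verify against AgentInputItem.
type UserItem = { role: "user"; content: string };
type AssistantItem = {
  role: "assistant";
  status: "completed";
  content: { type: "output_text"; text: string }[];
};
type InputItem = UserItem | AssistantItem;

// Order rows oldest-first, keep the last `maxMessages`, and convert each one.
function toAgentInput(rows: StoredMessage[], maxMessages = 20): InputItem[] {
  const ordered = [...rows].sort((a, b) => a.createdAt - b.createdAt);
  // slice(-0) would return everything, so handle a zero limit explicitly.
  const recent = maxMessages > 0 ? ordered.slice(-maxMessages) : [];
  return recent.map((m): InputItem =>
    m.role === "user"
      ? { role: "user", content: m.text }
      : {
          role: "assistant",
          status: "completed",
          content: [{ type: "output_text", text: m.text }],
        },
  );
}

// Example: rows may come back from the database in any order.
const sampleRows: StoredMessage[] = [
  { role: "assistant", text: "Posted to #general.", createdAt: 2 },
  { role: "user", text: "Post 'hi' to #general", createdAt: 1 },
  { role: "user", text: "Now summarize the channel", createdAt: 3 },
];
const agentInput = toAgentInput(sampleRows);
```

The result would then be passed as the second argument, e.g. `run(unified_agent, agentInput)`, presumably inside the Node.js action rather than the HTTP action.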

The Problem:

1. Runtime Mismatch: HTTP actions in Convex run in the default V8 runtime, but my Slack tools need the Node.js runtime.

2. Agent Integration: the `unified_agent` depends on those Node.js tools, but I'm calling it from an HTTP action.

3. Context Preservation: I need to maintain conversation history while allowing the agent to use external tools
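To make the runtime split concrete, here is a framework-free model of how the HTTP action could delegate to a Node.js action. It assumes the Node-only code (the Slack tools and the `run()` call) lives in an internal action in a separate `"use node"` file, reached from the HTTP action with `ctx.runAction`. `streamChatHandler`, `Deps`, `saveMessage`, and `runAgentTurn` are hypothetical names, and the Convex context is replaced by injected functions so the control flow can run outside Convex.

```typescript
// Framework-free model of the split. Names are hypothetical; the comments show
// which Convex call each injected dependency stands in for.
type Message = { role: "user" | "assistant"; text: string };

interface Deps {
  // ~ ctx.runMutation(...) on a mutation that appends to the thread
  saveMessage: (threadId: string, msg: Message) => Promise<void>;
  // ~ ctx.runAction(...) on an internal action in a "use node" file that
  //   loads history, calls run() with unified_agent, and saves the reply
  runAgentTurn: (args: { threadId: string }) => Promise<string>;
}

// Body of the HTTP action (default runtime): no Node.js APIs or Slack SDK here.
// It persists the user's message, so context lives in the database rather than
// in process memory, then delegates the whole agent turn to the Node side.
async function streamChatHandler(
  body: { threadId?: string; prompt?: string },
  deps: Deps,
): Promise<{ status: number; reply?: string }> {
  if (!body.threadId || !body.prompt) return { status: 400 };
  await deps.saveMessage(body.threadId, { role: "user", text: body.prompt });
  const reply = await deps.runAgentTurn({ threadId: body.threadId });
  return { status: 200, reply };
}

// In-memory fakes standing in for the database and the Node-side action.
const threads = new Map<string, Message[]>();

async function fakeSave(threadId: string, msg: Message): Promise<void> {
  threads.set(threadId, [...(threads.get(threadId) ?? []), msg]);
}

async function fakeRunAgentTurn(args: { threadId: string }): Promise<string> {
  const msgs = threads.get(args.threadId) ?? [];
  // A real implementation would call the agent here; the fake just echoes.
  const reply = `echo: ${msgs[msgs.length - 1]?.text ?? ""}`;
  await fakeSave(args.threadId, { role: "assistant", text: reply });
  return reply;
}

const fakeDeps: Deps = { saveMessage: fakeSave, runAgentTurn: fakeRunAgentTurn };
```

Calling `streamChatHandler({ threadId: "t1", prompt: "hi" }, fakeDeps)` resolves to status 200 with reply `"echo: hi"`, and thread `t1` then holds both messages. In a real HTTP action the result would be turned into a `Response`, and awaiting `ctx.runAction` means waiting for the full agent turn; if the reply should stream, one option is for the Node action to write chunks to the database as it goes.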

Specific Questions:

1. How can I structure this so that the HTTP action can trigger an agent that uses Node.js runtime tools?

2. What's the best pattern for combining V8 and Node.js runtimes in Convex when using agents with external tool dependencies?

3. Should I split this into separate actions/queries, or is there a way to bridge the runtime gap within the agent workflow?
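Regarding the bridge in question 3: one way to connect the two runtimes is through the database, where the Node.js action writes the agent's streamed text and default-runtime code (a query or the HTTP action) reads it back. The hypothetical `ChunkBuffer` below is a minimal sketch of the writer side, assuming text arrives as small deltas from a streamed agent run; it batches them so each database write carries a useful amount of text.

```typescript
// Buffers small text deltas and flushes them as larger chunks, so the
// Node-side action issues one database write per chunk instead of per token.
// `flushFn` is injected; in Convex it might wrap ctx.runMutation on a
// hypothetical "append chunk" mutation keyed by a stream id.
class ChunkBuffer {
  private pending = "";
  private readonly flushFn: (text: string) => Promise<void>;
  private readonly minChars: number;

  constructor(flushFn: (text: string) => Promise<void>, minChars = 64) {
    this.flushFn = flushFn;
    this.minChars = minChars;
  }

  // Append a delta; flush once enough text has accumulated.
  async push(delta: string): Promise<void> {
    this.pending += delta;
    if (this.pending.length >= this.minChars) await this.flush();
  }

  // Write out whatever is pending (call once more when the agent run ends).
  async flush(): Promise<void> {
    if (this.pending.length === 0) return;
    const text = this.pending;
    this.pending = "";
    await this.flushFn(text);
  }
}

// Example: replay fake deltas, as if they came from a streamed agent run.
async function demo(): Promise<string[]> {
  const written: string[] = [];
  const buffer = new ChunkBuffer(async (t) => {
    written.push(t);
  }, 5);
  for (const delta of ["He", "llo", " wo", "rld"]) await buffer.push(delta);
  await buffer.flush();
  return written;
}
```

Here `demo()` resolves to `["Hello", " world"]`, i.e. two writes for four deltas. The `minChars` threshold trades write volume against how quickly a reader sees new text.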