Network latency: Pro vs Free

While testing, we noticed some sluggishness in network response times on our end and shrugged it off: we're on the Free tier for now and will go Pro anyway.

Summa summarum: We upgraded and hoped to see some improvements. Our expectation was dev deployments are being allotted less resources and prod will most likely be fine. Nothing changed though. Even the simplest queries where we retrieve a doc directly by

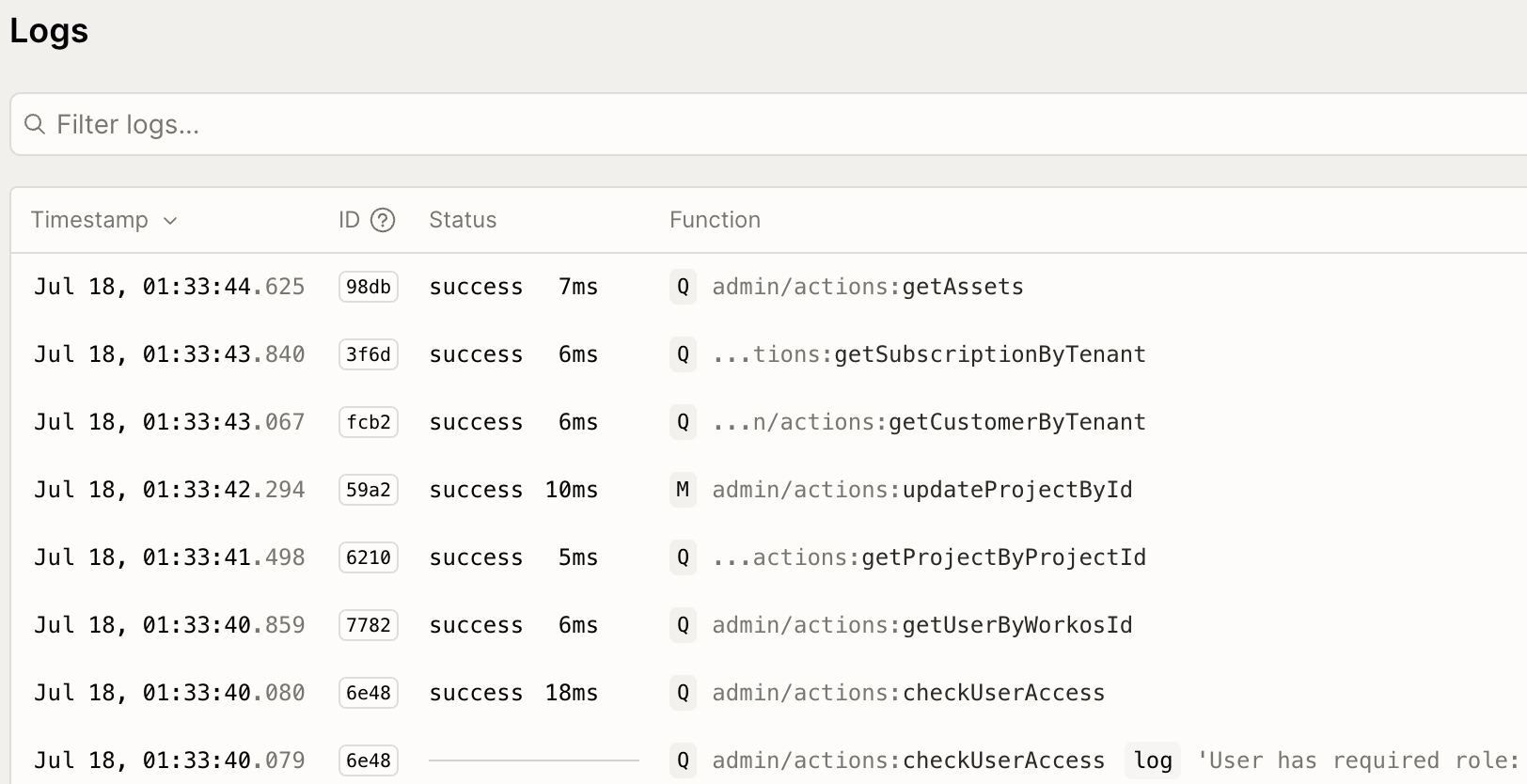

idI created a simple testbench which calls some functions of ours and timed the query/mutation/action calls and get the following result:

The associated execution times are shown in the provided screenshot.
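For anyone who wants to reproduce this kind of measurement, here is a rough sketch of such a testbench. It is not our actual code. The helper `timeCalls` and the `LatencyStats` type are hypothetical names, and the default run and warm-up counts are arbitrary assumptions. The helper calls an async function sequentially, so each sample covers one full round trip, and then reports min/median/p95/max latency in milliseconds.

```typescript
// Hypothetical summary of observed latencies, all values in milliseconds.
interface LatencyStats {
  label: string;
  runs: number;
  minMs: number;
  medianMs: number;
  p95Ms: number;
  maxMs: number;
}

// Nearest-rank percentile on an ascending-sorted, non-empty array.
function percentile(sorted: number[], p: number): number {
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.min(sorted.length - 1, Math.max(0, rank - 1))];
}

// Hypothetical helper: times `runs` sequential calls after `warmup` untimed calls.
async function timeCalls(
  label: string,
  call: () => Promise<unknown>,
  runs = 20,
  warmup = 2,
): Promise<LatencyStats> {
  if (runs < 1) throw new Error("runs must be at least 1");

  // Untimed warm-up calls absorb one-off costs such as connection setup.
  for (let i = 0; i < warmup; i++) {
    await call();
  }

  const samples: number[] = [];
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    await call(); // awaited one at a time, so samples do not overlap
    samples.push(performance.now() - start);
  }

  samples.sort((a, b) => a - b);
  const mid = Math.floor(samples.length / 2);
  const medianMs =
    samples.length % 2 === 0 ? (samples[mid - 1] + samples[mid]) / 2 : samples[mid];

  return {
    label,
    runs,
    minMs: samples[0],
    medianMs,
    p95Ms: percentile(samples, 95),
    maxMs: samples[samples.length - 1],
  };
}
```

With Convex, `call` could wrap something like `() => client.query(api.docs.get, { id })`, where `client` is a `ConvexClient` and `api.docs.get` is a placeholder for one of your own functions. Mutations and actions can be wrapped the same way. Running calls sequentially rather than in parallel keeps each sample a clean round-trip time, at the cost of a longer total run.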

This is in prod, using the `ConvexClient`.

I will continue in my next message.